AI-Generated Content: How Companies Can Avoid the Slop Ahead

A giant collection of floating debris called The Great Pacific Garbage Patch covers a huge swath of the North Pacific Ocean. An environmental tragedy, it’s a grim reminder of humanity’s overreliance on single-use plastics.

We’re headed for a future of AI-generated garbage patches. AI-generated content can be helpful, making new information more accessible in new modalities. However, an explosion of mediocre content at work can harm employees trying to learn, grow, and be productive in their jobs.

We all know spam. Get ready for slop.

My favorite new phrase for unhelpful, AI-generated content is “slop.” Unwanted email is spam; unwanted AI-generated content is slop. And you’d better believe there’s a lot of slop coming our way.

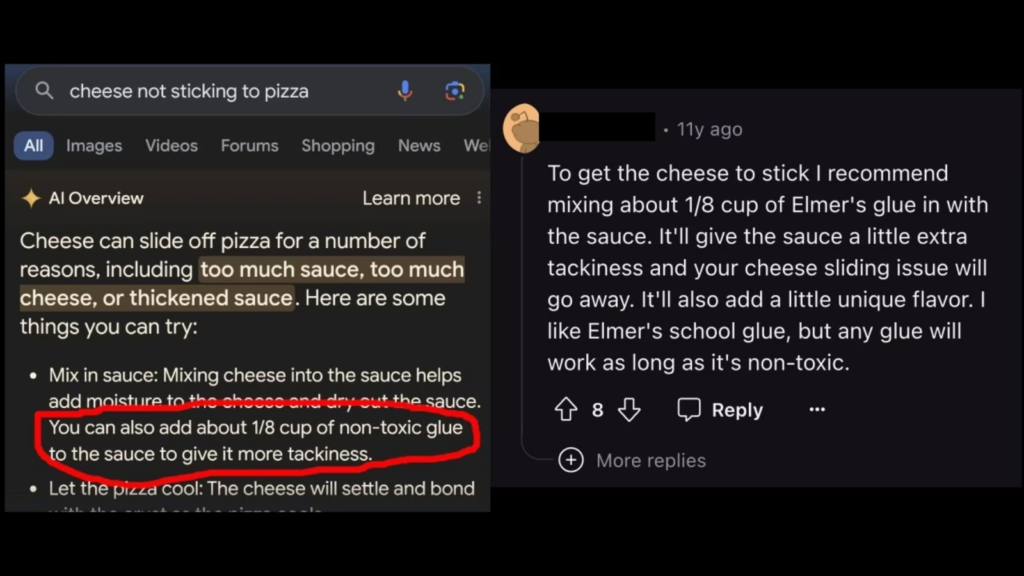

One type of slop increasingly barrelling our direction is the AI-generated summary. Google recently added AI summaries to its search results, causing some hilarious (but problematic) situations like this one:

AI is increasingly capable of generating text, images, videos, and music out of seemingly nothing. AI has created an estimated 15 billion images in the past 1.5 years. It sounds like a lot, and it is. For perspective, AI generated about as many images in 1.5 years as photographers took in the last 150 years.

We saw a similar phenomenon during the last 15 years with the rise of user-generated content, but AI-generated content will be even bigger in scale. Another source suggests that 90% of the internet’s content will be AI-generated by 2026.

At such a massive scale, what does this dump of content mean for workers and companies? This explosion of content will have dramatic consequences for how employees find information, whether it’s to guide their learning or to help them find resources to perform in their roles.

The Risks of AI-Generated Content in the Workplace

An explosion of mediocre content will make it harder to find high-quality, authoritative sources. Let’s look at some of the specific workplace challenges we should expect with more AI-generated content:

1. AI hallucinates and can provide misinformation.

AI has an incredible poker face—you really can’t tell when it’s bluffing. AI can struggle with topics when online dialogue among individuals doesn’t align with evidence-based research. It also struggles to effectively handle new and cutting-edge topics. This means that we will continue to need authoritative sources.

Example: If your employee searches “What are the best cybersecurity tips?” your AI assistant may recommend out-of-date practices that put your company’s data at risk.

2. AI is bad at holistic understanding.

When AI is asked a question about a collection of documents (like those found in your Google Drive or Microsoft OneDrive), it typically uses a method called retrieval-augmented generation (RAG).

That is a fancy way of saying it runs a search across the documents. It looks for keywords similar to your query, pulls chunks of information from the matching documents, and then sends those chunks to a large language model (LLM) to generate a response. This means that AI is really good at finding a “needle in the haystack.” In other words, AI can find a detail that matches the query, but it is bad at connecting dots and seeing the big picture.

Example: If a manager asks an AI assistant to summarize the key themes of a set of resources, it will struggle if those key themes are not explicitly called out.
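For readers who want to see the retrieval step described above in concrete terms, here is a minimal sketch in Python. It is an illustration under simplifying assumptions, not how any particular product works: the function names (`chunk`, `score`, `retrieve`, `build_prompt`) are hypothetical, the scoring is plain keyword overlap, and production systems usually rely on more sophisticated similarity measures. The final call to a language model is left out; the sketch stops at the prompt that would be sent.

```python
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into simple word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def chunk(text, size=40):
    """Split a document into chunks of at most `size` words."""
    words = text.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]


def score(query, passage):
    """Count how many query words also appear in the passage."""
    q = Counter(tokenize(query))
    p = Counter(tokenize(passage))
    return sum(min(q[w], p[w]) for w in q)


def retrieve(query, documents, top_k=2):
    """Return the top_k chunks with the highest keyword overlap."""
    chunks = [c for doc in documents for c in chunk(doc)]
    ranked = sorted(chunks, key=lambda c: score(query, c), reverse=True)
    return ranked[:top_k]


def build_prompt(query, passages):
    """Assemble the text that would be sent to the language model."""
    context = "\n".join(f"- {p}" for p in passages)
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


if __name__ == "__main__":
    # Made-up sample documents, for illustration only.
    docs = [
        "Password policy: rotate admin passwords every 90 days.",
        "Travel policy: book flights two weeks ahead.",
    ]
    question = "How often should we rotate admin passwords?"
    print(build_prompt(question, retrieve(question, docs, top_k=1)))
```

Notice that the model only ever sees the handful of chunks that matched. Nothing in this pipeline reads every document end to end, which is one reason themes that are never stated outright tend to get missed.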

3. AI summaries remove important context.

AI can present a quick answer to almost any query. The problem is that the answer, which may have been sourced from internal documents, lacks the surrounding context of the author, their background, the date it was published, the context in which the answer was provided, etc. This means you could get information that is low quality or out of date and not realize it.

Example: If an employee searches for “What is the latest sales forecast?” your AI assistant could just as likely find a document from five years ago that uses the term “latest sales forecast.”
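One way to picture the missing context is to imagine each retrieved passage carrying its author and publication date along with it. The Python sketch below is a hypothetical illustration: the `Passage` class, the helper names, and the one-year staleness threshold are all assumptions made for this example, not features of any specific tool.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Passage:
    text: str
    author: str
    published: date


def newest_first(passages):
    """Order passages so the most recently published comes first."""
    return sorted(passages, key=lambda p: p.published, reverse=True)


def cite(passage, today, max_age_days=365):
    """Show the passage with its author and date, flagging old material."""
    age_days = (today - passage.published).days
    stale = " (may be out of date)" if age_days > max_age_days else ""
    return f"{passage.text} [{passage.author}, {passage.published.isoformat()}]{stale}"


if __name__ == "__main__":
    # Made-up sample data, for illustration only.
    results = [
        Passage("Latest sales forecast: example A", "Author A", date(2019, 3, 1)),
        Passage("Latest sales forecast: example B", "Author B", date(2024, 5, 1)),
    ]
    for p in newest_first(results):
        print(cite(p, today=date(2024, 6, 1)))
```

With the date and author attached, a five-year-old forecast is at least labeled as such instead of being presented as the answer.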

Managing AI Search and Content with the LXP

Despite all the challenges, on-demand summaries and answers generated by AI are just too convenient to resist. The time savings they promise and provide are too great. Fortunately, L&D leaders can maximize these new AI content capabilities and mitigate the risks with the help of a Learning Experience Platform (LXP).

And this isn’t the LXP’s first content clean-up rodeo. Around 12 years ago, the Learning Experience Platform emerged in response to a similar challenge with an explosion of online learning resources. Companies needed a technology that could connect and curate learning content most relevant to individual employees, the teams and departments those employees work within, and entire organizations.

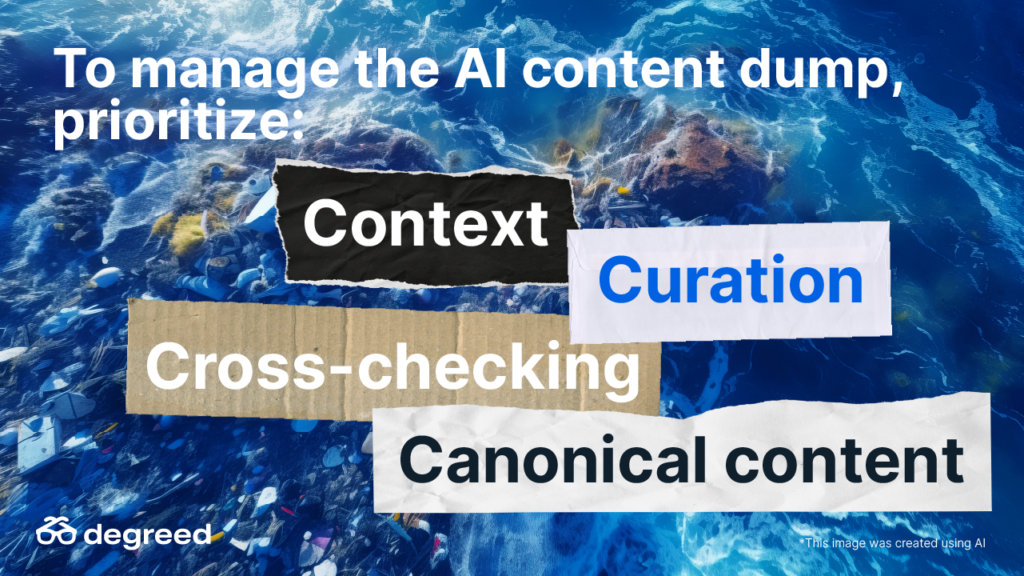

For over a decade now, the LXP has filled, and grown beyond, its role as a content consolidation machine. And today, the LXP can help navigate AI-generated content by providing context, curation, and canonical sources.

The LXP is adapting to help tackle these challenges brought on by AI:

- The LXP offers canonical resources from authoritative sources. Not only do these authoritative sources provide credible and accurate resources, but having canonical resources also helps create shared experiences and a shared point of reference.

- The LXP provides a system of curation that makes it easy to know what to pay attention to. Curation provides clear guidance and helps avoid too many choices.

- The LXP can surround resources with the necessary context. That context may be a note from your manager or the ability to see how certain skills align to key roles and initiatives in your company.

- AI agents tasked with reviewing, cross-checking, and cleaning up content will become plentiful. If AI is getting us into this mess, the least it can do is help clean it up. Soon you’ll be able to get AI to do the heavy lifting when it comes to maintaining your content, so it remains trustworthy.

Combining the Benefits of AI Chat Assistants with the LXP

Employees might choose to search a learning app to find what they need or use an AI chat assistant for convenience. In the future, tech vendors and organizations can connect and integrate with chat assistants to guide people to curated, trustworthy, and authoritative sources.

The time to be proactive is now.

While it may be easier to create junk than to clean it up, dedicated tools and a thoughtful approach can keep the slop out. Let’s not wait until the land of learning is riddled with garbage before we start cleaning it up.

If you want to explore the challenges of AI-generated content and how to integrate it into your learning system, we’d love to chat with you. Email tblake@degreed.com.
